Chalmers' ambition is to become one of Europe's top academic environments by 2041 and, in doing so, to contribute even more to Sweden's international competitiveness and to the transition towards a sustainable society. Our research spans our 13 departments and results in about 3 000 scientific articles each year.

Selected research areas

These research areas bring together expertise from several departments and focus on global societal challenges.

Energy

For a transition to sustainable energy systems and a common understanding of long-term challenges.

Health Engineering

Sustainable solutions to major health-related societal challenges, in close collaboration with the healthcare sector.

ICT, digitalisation and AI

Aiming for a smarter, safer society.

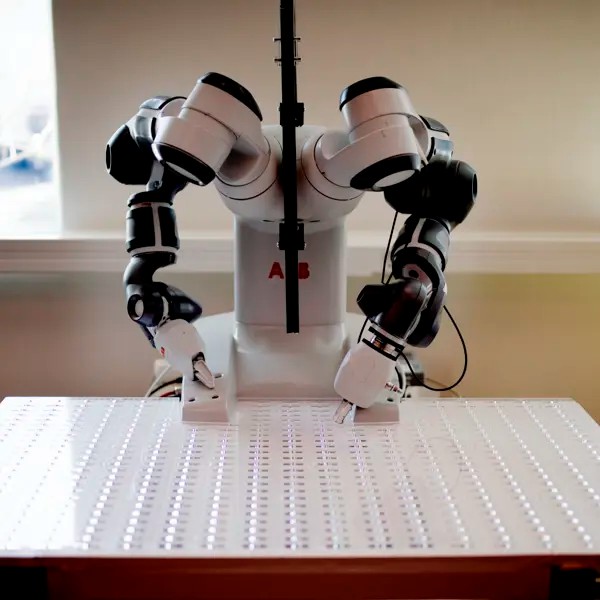

Industry and production

Sustainable industry through the transition to a circular economy, industrial digitisation and the use of new materials.

Land

With a growing population comes an increasing need for well-planned, efficient communities with access to clean water and sanitation, more housing that is also climate-adapted, resilient infrastructure for water, energy and transportation, and sustainable agriculture and forestry.

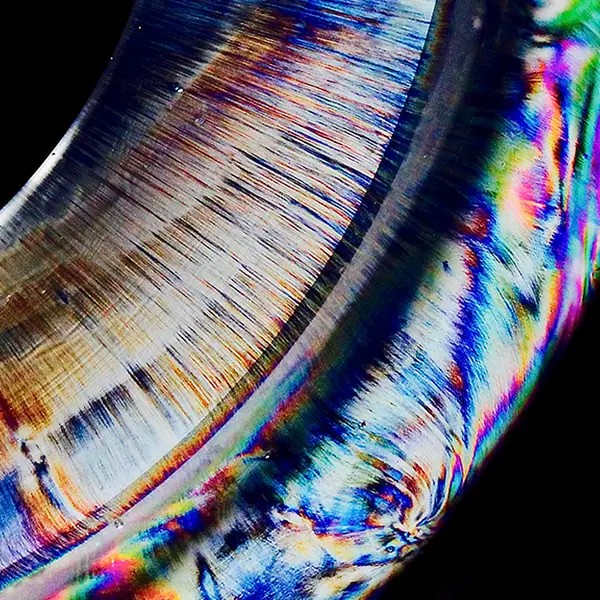

Materials science

We enable the green transition through excellent materials science in energy, health, construction and transport.

Nanoscience and quantum technology

Research into the unique physical, chemical and biological phenomena that prevail at very small dimensions, and the technologies that utilise these phenomena.

Ocean

Our strong maritime heritage dates back to the founding of the university. Today, most departments conduct research, education and/or utilisation related to the marine and maritime domain, contributing to the grand challenges associated with human use of the oceans and marine resources. This work is in line with the UN Decade of Ocean Science for Sustainable Development.

Space

A sustainable approach to space technology meeting societal and scientific challenges now and in the future, on Earth and beyond.